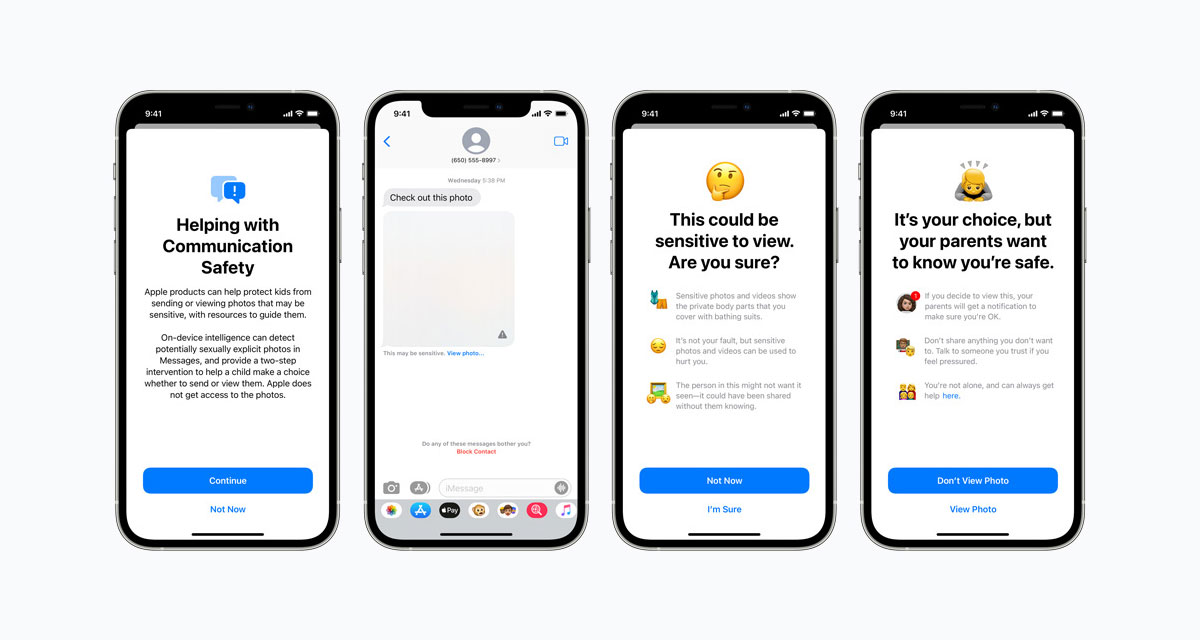

Apple has announced that it will delay the controversial CSAM detection system it detailed last month. Since its announcement, the system has drawn heavy criticism from privacy advocates.

In a statement provided to members of the press, Apple said that it will be taking “additional time” over the coming months to “collect input” on where to go from here.

Apple’s CSAM systems had been expected to go live with iOS 15, which ships this month.

“Last month we announced plans for features intended to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material. Based on feedback from customers, advocacy groups, researchers and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features.”

Under the system, Apple would have generated hashes of photos being uploaded to iCloud Photos. If those hashes matched the hashes of known CSAM content, Apple would have alerted the National Center for Missing & Exploited Children (NCMEC), which works with law enforcement. That would only happen once a threshold of around 30 matches was reached, and even then only after a manual review, the only point at which a human would be involved.
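To make the threshold idea concrete, here is a deliberately simplified sketch in Python. It is not Apple's implementation: it assumes plain SHA-256 digests as a stand-in for Apple's perceptual hashes, omits the cryptographic protections Apple described, and uses hypothetical names (`photo_hash`, `count_matches`, `needs_human_review`, `MATCH_THRESHOLD`) chosen for illustration. It only shows how a match count compared against a threshold of around 30 would gate any human review.

```python
import hashlib

# Hypothetical threshold, based on the "around 30 matches" figure reported above.
MATCH_THRESHOLD = 30


def photo_hash(photo_bytes: bytes) -> str:
    # Stand-in only: an exact SHA-256 digest, NOT the perceptual hash Apple used.
    return hashlib.sha256(photo_bytes).hexdigest()


def count_matches(photos: list[bytes], known_hashes: set[str]) -> int:
    """Count photos whose hash appears in the database of known hashes."""
    return sum(1 for photo in photos if photo_hash(photo) in known_hashes)


def needs_human_review(photos: list[bytes], known_hashes: set[str],
                       threshold: int = MATCH_THRESHOLD) -> bool:
    """Flag an account for manual review only once matches reach the threshold."""
    return count_matches(photos, known_hashes) >= threshold


if __name__ == "__main__":
    # Made-up placeholder data: 40 "known" items, a library holding 29 of them.
    known = {photo_hash(b"known-image-%d" % i) for i in range(40)}
    library = [b"known-image-%d" % i for i in range(29)] + [b"holiday-photo"]
    print(count_matches(library, known), needs_human_review(library, known))
```

With 29 matches the sketch stays below the threshold, so nothing is flagged; a 30th match would be the first point at which a review is triggered.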

It isn’t clear when Apple’s CSAM protections will be re-thought and re-announced.
